9 Commandments of Intelligence Analysis: The Kent Analytic Doctrine

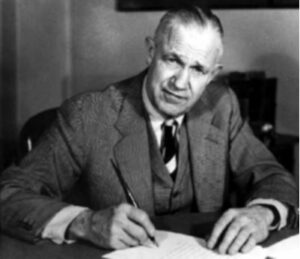

Sherman Kent was a historian and Yale professor who, during World War II, brought the rigorous methods of academia to the intelligence community. After the war, he spent 17 years at the CIA. Kent is commonly regarded as the founding father of intelligence analysis.

The Kent Analytic Doctrine dominates intelligence analysis today, but Kent never enumerated it himself. Rather, Frans Bax, founding Dean of the Kent School, first put Sherman Kent’s core principles to paper.

The doctrine consists of 9 pillars by which Kent operated. At first glance, they may seem like simple common sense. However, humans easily forget common sense when convenience beckons. By following a codified model, analysts make intelligence analysis repeatable, consistent, measurable, and more credible.

I’ll paraphrase each pillar of the code as described in Jack Davis’ November 2002 paper.

1. Focus on Policymaker Concerns

To be valuable, intelligence analysis needs to help clients manage challenges. To be useful in managing challenges, it must be understandable to clients. And to be understandable, it must be tailored by an analyst who knows the client’s decision cycle and existing knowledge. Therefore, valuable intelligence focuses on the concerns of its clients.

It’s important to note that this does not mean analysts should ignore concerns unknown to their clients. It simply implies that irrelevant, pedantic, or unusable analysis holds less value.

2. Avoidance of a Personal Policy Agenda

It is not the role of analysis to make recommendations or choices; that is the client’s job. Further, analysis should avoid adopting an adversarial agenda. Instead, it should support the decision-making process as a reliable source of impartial information. This requires attention to many different points of view and outcomes.

3. Intellectual Rigor

Information used in the analytic process must be “rigorously evaluated for validity” and for relevance (diagnosticity). This is done through a variety of methods, including but not limited to evaluating data, consulting experts, and making assumptions carefully. When less-than-certain information factors into the analysis, it must be explicitly declared and accounted for.

4. Conscious Effort to Avoid Analytic Biases

Humans have inherent cognitive tendencies that, unchecked, will color their analyses. At every step in the process, analysts must take pains to challenge their assumptions. Common biases include groupthink, mirror imaging, and target fixation.

In addition, anecdotal evidence – or “field exposure” – is only a supplement to analytical tradecraft.

5. Willingness to Consider Other Judgments

Analysts must make a conscious effort to consider judgments other than their own. Dissent is welcome if paired with its raison d’être. The doctrine asserts that numerous points of view make for a stronger, more resilient analytic product because of the diversity in expertise and organizational vantage points. Through review, analysts can refine their analysis by strengthening, clarifying, or removing weak points and by considering new ideas.

6. Systematic Use of Outside Experts

Analysts must seek diverse opinions both inside and outside their organization. This includes their own clients as well as “news media accounts and general and specialized journals”. Because the paper was published in 2002, it doesn’t mention social media sentiment, but my hunch is that it would fit within this tenet.

Of course, these accounts (especially social media) must be subject to the “intellectual rigor” central to Kent’s doctrine. However, the doctrine does not require that such accounts support the analyst’s opinion; in fact, it explicitly acknowledges that they may not.

7. Collective Responsibility for Judgment

The meaning of “collective responsibility” as used here is worth understanding. The doctrine plainly asserts that analysts “should represent and defend the appropriate corporate point of view”; in other words, they should toe the line.

Less explicitly, this view implies that failure falls on the shoulders of the entire agency, or at least the directorate, rather than on the individuals directly involved in a judgment. This collective responsibility cultivates a culture that adheres tightly to established rules and procedures and does not proportionally reward risk-taking. These traits are neither inherently positive nor inherently negative.

This point speaks largely to organizational coordination, and an understated requirement of such coordination is time. Refining an analytic product takes time because of the broad and nuanced perspectives that must be consulted and the effort required to analyze each one.

8. Effective Communication of Policy-Support Information and Judgments

An intelligence product of any quality is worth nothing if communicated poorly; in the worst cases, poor delivery can even do harm. For communication to be effective, analysts must make key points clearly and briefly. Brevity is vital because policymakers are busy and lack the time or expertise to consider minutiae.

Brevity must be balanced against clarity. Where omitting detail would create confusion, analysts should provide a “carefully measured dose of detail”.

Finally, any uncertainty in an assessment should stem from the issue itself. Analysts can introduce endless uncertainty by making non-falsifiable judgments, so they should avoid such judgments.

9. Candid Admission of Mistakes

Naturally, making falsifiable judgments means an analyst’s assessments are never guaranteed to be correct. When an analyst is wrong, the mistake provides an opportunity to learn, but the lesson is learned only after the analyst sincerely admits the mistake. Therefore, improvement relies upon the admission of mistakes.

Policymakers value this candor over backpedaling or excuse-making.